Beyond the Prompt: A Deep Dive into Context Engineering, the Future of AI

Move beyond prompt engineering. Learn what Context Engineering is, why it's the future of AI, and how to build smarter, more reliable LLM applications with advanced techniques like RAG and AI agents.

As AI systems mature, the conversation is shifting from a narrow focus on "prompt engineering" to a broader discipline: Context Engineering. Crafting the perfect prompt was our initial gateway to Large Language Models (LLMs), but a model's output depends not just on what we ask. It depends on all the information we provide alongside the question.

This article explores context engineering: what it is, why it goes beyond simple prompt engineering, and how it is being implemented to create more intelligent, reliable, and "magical" AI systems.

From Asking Better Questions to Building Smarter Worlds: The Evolution to Context Engineering

For a time, "prompt engineering" was the definitive skill in AI. It was the art of finding the magic words to coax the desired output from a model. However, as our ambitions have grown from simple chatbots to complex, autonomous AI agents, the limitations of a single, static prompt have become clear.

What is Context Engineering?

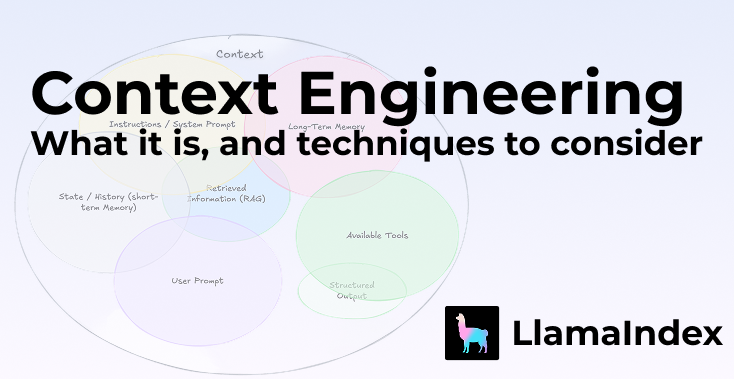

Context engineering is the discipline of designing, architecting, and optimizing the entire information ecosystem that an AI model accesses to perform a task. Think of it as moving from being a person asking a question to being an intelligence chief who provides a comprehensive briefing dossier. As prominent AI researcher Andrej Karpathy puts it, "context engineering is the delicate art and science of filling the context window with just the right information for the next step."

This engineered context is a dynamic and multifaceted information stream that can include:

- Instructions and System Prompts: The foundational rules that define the AI's persona, objectives, and constraints.

- User Input: The immediate query or task from the user.

- Conversational History (Short-Term Memory): The preceding turns in a dialogue to maintain a coherent interaction.

- Retrieved Information (RAG): Data dynamically pulled from external knowledge bases, databases, or the internet to provide factual, up-to-date information.

- Available Tools: Definitions of functions or APIs the AI can use to interact with the outside world, like sending emails or checking calendars.

- Persistent Knowledge (Long-Term Memory): User preferences and key facts learned across multiple interactions.

Prompt engineering, therefore, is a crucial subset of context engineering. It focuses on crafting the user input and instructions, while context engineering is the broader practice of orchestrating all these informational elements.
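To make the components listed above concrete, here is a minimal sketch of how they might be assembled into a single model input. The `ContextBundle` class, the `build_context` function, the section titles, and the ordering are all assumptions chosen for illustration; real systems often pass tools and history through structured API fields rather than one string.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the context components listed above."""
    system_prompt: str
    user_input: str
    history: list[str] = field(default_factory=list)          # short-term memory
    retrieved_docs: list[str] = field(default_factory=list)   # RAG results
    tool_specs: list[str] = field(default_factory=list)       # tool descriptions
    long_term_facts: list[str] = field(default_factory=list)  # persistent memory


def _section(title: str, items: list[str]) -> str:
    """Render one labeled section, or an empty string if there are no items."""
    if not items:
        return ""
    body = "\n".join(f"- {item}" for item in items)
    return f"## {title}\n{body}"


def build_context(bundle: ContextBundle) -> str:
    """Join the non-empty sections into a single prompt string."""
    sections = [
        f"## Instructions\n{bundle.system_prompt}",
        _section("Known facts about the user", bundle.long_term_facts),
        _section("Available tools", bundle.tool_specs),
        _section("Retrieved information", bundle.retrieved_docs),
        _section("Conversation so far", bundle.history),
        f"## User input\n{bundle.user_input}",
    ]
    return "\n\n".join(s for s in sections if s)


# Example usage with invented data.
example = ContextBundle(
    system_prompt="You are a concise scheduling assistant.",
    user_input="Can we meet tomorrow?",
    history=["User: Hi", "Assistant: Hello, how can I help?"],
    tool_specs=["check_calendar(date): list free slots on a date"],
)
print(build_context(example))
```

In this sketch, prompt engineering corresponds to writing `system_prompt` and `user_input`, while context engineering covers deciding what goes into every other field and in what order.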

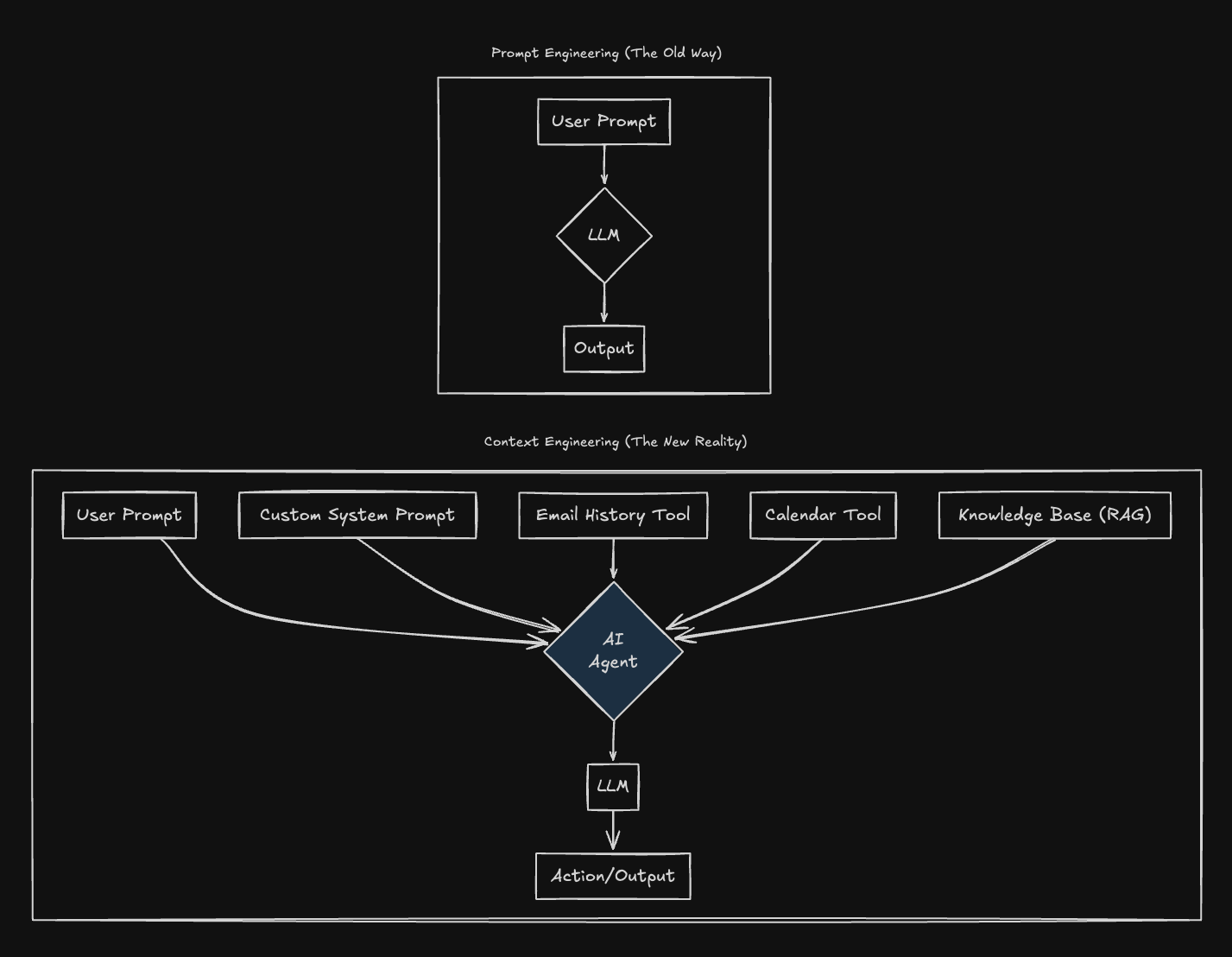

Visualizing the Difference: Prompt vs. Context Engineering

A simple diagram can illustrate this evolution:

Why Context Engineering is the Linchpin of Modern AI

The shift to context engineering is driven by the need for more capable, reliable, and scalable AI applications.

- Reduces Hallucinations and Improves Accuracy: By grounding the LLM in verifiable, external data through techniques like Retrieval-Augmented Generation (RAG), context engineering can reduce the likelihood that the model generates false or misleading information.

- Enables Complex, Multi-Step Tasks: AI agents that can perform long-running tasks require a managed "memory" of past actions and information. Context engineering provides this working memory, allowing the agent to maintain state and reason through complex workflows.

- Delivers True Personalization: To offer a genuinely personalized experience, an AI needs to remember user preferences and past interactions. Context engineering builds the systems that manage this persistent user data.

- Unlocks Specialized Expertise: A generic LLM can be transformed into a domain-specific expert by providing it with the right context. For instance, a legal AI assistant becomes powerful when it has access to relevant case law and statutes.

- Moves AI from "Demo" to "Product": Many AI applications feel like impressive but brittle demos. The difference between a cheap demo and a "magical" product is often the quality of the context provided. Robust context engineering is what makes AI reliable enough for production systems.

Core Pillars of Context Engineering in Practice

Effective context engineering revolves around four key strategies for managing the information within the LLM's limited context window:

- Write: This involves persisting information outside the immediate context window, such as in a "scratchpad" or long-term memory, that the agent can refer to later. This is crucial for tasks where an agent needs to take notes or remember facts across sessions.

- Select: This is the art of dynamically pulling the most relevant information into the context window at the right time. This includes retrieving documents via RAG or providing the agent with only the tools relevant to its current sub-task.

- Compress: Given the finite size of context windows, it's essential to retain only the most critical information. This can involve summarizing past parts of a conversation or using smaller, specialized models to distill information before it's passed to the main reasoning model.

- Isolate: For very complex problems, it can be effective to break them down and have an agent (or multiple agents) work on sub-tasks in isolated contexts to prevent interference. This ensures focus and can improve overall performance.
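The four strategies above can be sketched as small, independent helpers. This is a toy illustration under stated assumptions, not a reference implementation: the names `Scratchpad`, `select`, `compress`, and `isolate` are hypothetical, word overlap stands in for embedding-based retrieval, and the "summary" is a placeholder string where a real system might call a smaller model.

```python
from collections.abc import Callable


class Scratchpad:
    """Write: keep notes outside the context window (here, an in-memory dict)."""

    def __init__(self) -> None:
        self._notes: dict[str, str] = {}

    def write(self, key: str, note: str) -> None:
        self._notes[key] = note

    def read(self, key: str) -> str:
        return self._notes.get(key, "")


def select(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Select: return up to k documents sharing the most words with the query.

    Word overlap is a toy stand-in for embedding-based retrieval.
    """
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:k] if score > 0]


def compress(history: list[str], keep_last: int = 2) -> list[str]:
    """Compress: keep the latest turns and replace older ones with a placeholder.

    Assumes keep_last >= 0. A real system might summarize the older turns.
    """
    if len(history) <= keep_last:
        return list(history)
    older = len(history) - keep_last
    return [f"[summary placeholder: {older} earlier turns omitted]"] + history[older:]


def isolate(subtasks: list[str], worker: Callable[[str], str]) -> dict[str, str]:
    """Isolate: run each sub-task with only its own text as context."""
    return {task: worker(task) for task in subtasks}
```

Each helper touches only its own inputs, which mirrors the point of the strategies: what enters the context window is decided deliberately, one step at a time, rather than by appending everything to a growing prompt.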

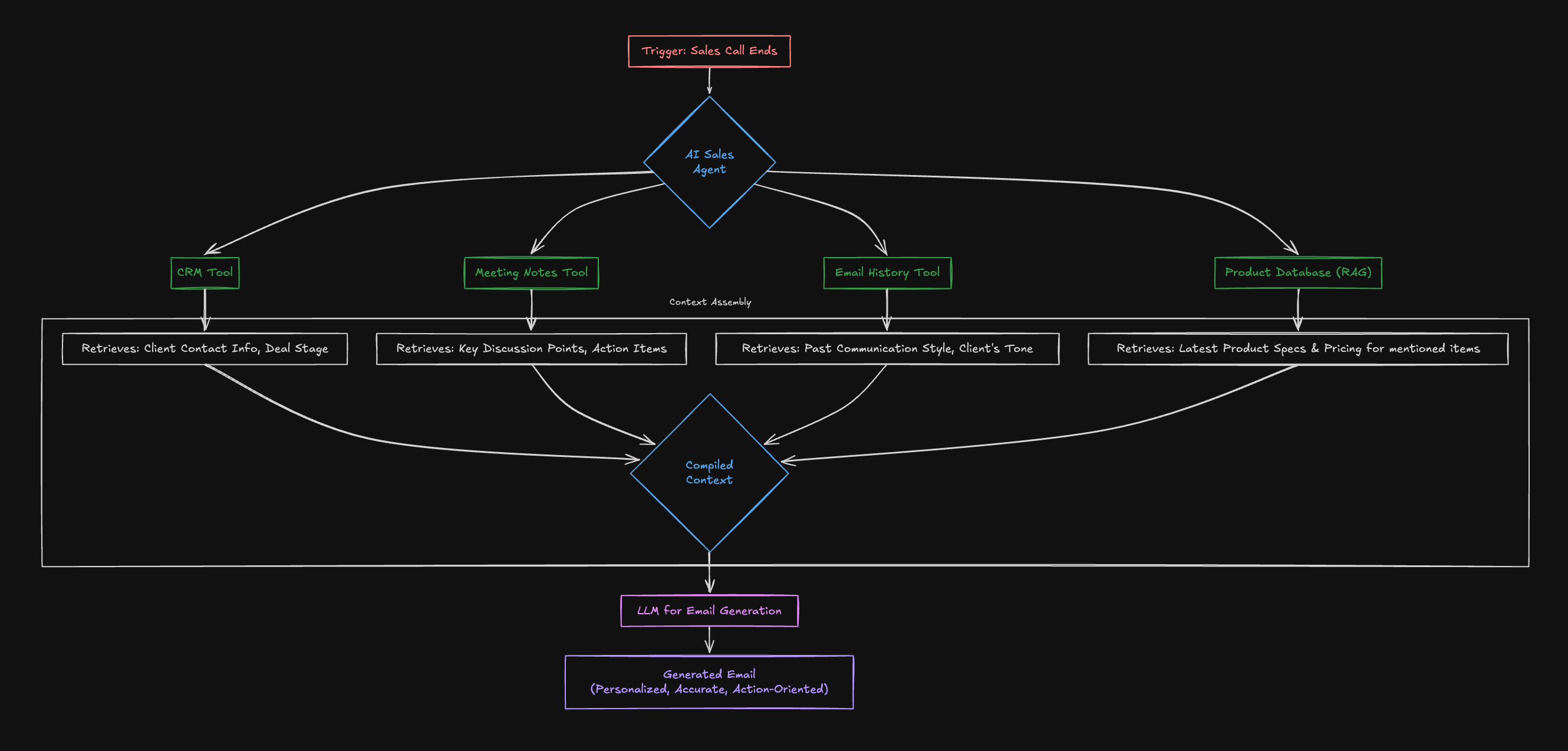

A Practical Use Case: The AI-Powered Sales Assistant

Let's consider an AI sales assistant designed to draft a follow-up email after a client call.

Without Context Engineering (Simple Prompt):

Prompt: "Write a follow-up email to a potential client."

Result: A generic, unhelpful email.

With Context Engineering:

The AI agent accesses multiple tools to build a rich context before calling the LLM.

Here is a diagram illustrating this workflow:

This context-rich approach allows the AI to generate a highly personalized and effective email that references specific discussion points, includes correct client details, and links to the relevant product information.
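The workflow described above can be sketched as follows. Everything here is hypothetical: the tool functions (`fetch_client`, `fetch_call_notes`, `fetch_product_link`), the client data, and the `example.com` URL are invented stand-ins for a real CRM, transcript store, and product catalog. The sketch stops at building the prompt, since the LLM call itself depends on the provider.

```python
from dataclasses import dataclass


@dataclass
class ClientRecord:
    name: str
    company: str


# Hypothetical tool stubs. A real agent would query external systems here;
# all returned data is invented for illustration.
def fetch_client(client_id: str) -> ClientRecord:
    return ClientRecord(name="Dana Example", company="Example Corp")


def fetch_call_notes(client_id: str) -> list[str]:
    return ["Asked about onboarding time", "Wants a pricing breakdown"]


def fetch_product_link(topic: str) -> str:
    return f"https://example.com/products/{topic}"


def build_email_prompt(client_id: str, topic: str) -> str:
    """Gather tool outputs first, then compose the prompt for the LLM."""
    client = fetch_client(client_id)
    notes = "\n".join(f"- {note}" for note in fetch_call_notes(client_id))
    link = fetch_product_link(topic)
    return (
        "Write a follow-up email after a sales call.\n"
        f"Client: {client.name} ({client.company})\n"
        f"Discussion points:\n{notes}\n"
        f"Product link: {link}"
    )


print(build_email_prompt("client-001", "analytics"))
```

Compared with the one-line prompt in the first scenario, the instruction is nearly the same. What changes is the surrounding context: client details, discussion points, and a product link gathered before the model is called.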

Prompts for AI-Generated Diagrams and Visuals

To further explore these concepts, use the following prompts with AI image generation tools:

- For the concept of Context Engineering: "An illustration of a central, glowing brain (the LLM) connected by luminous data streams to various sources: a library (knowledge base), a calendar, a contact book, and a toolbox. The overall image should convey a sense of intelligent orchestration and a rich information ecosystem."

- For an AI Agent: "A sleek, futuristic robot collaborating with a human at a holographic interface. The interface displays interconnected nodes of information, representing the agent's access to diverse contextual data like charts, documents, and communication logs. The tone is optimistic and collaborative."

- Prompt vs. Context: "A split-panel image. On the left, labeled 'Prompt Engineering,' a person whispers a single sentence into a large, simple keyhole. On the right, labeled 'Context Engineering,' a person assembles a complex, multi-part key made of data, tools, and documents to unlock a sophisticated, ornate door."

The Future is Context-Aware

Mastering context engineering is becoming the number one job for engineers building sophisticated AI systems. It represents a fundamental shift from simply talking to an AI to thinking with an AI. The organizations and individuals who excel at designing these rich information ecosystems will be the ones who build the next generation of truly intelligent and transformative AI applications. The era of the simple prompt is over; the future of AI is context-aware.
